Day 1

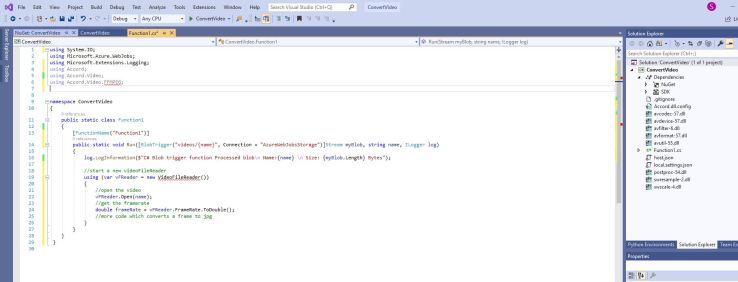

The sticking point is turning into a mountain: converting video to images using an Azure serverless function.

I have found another wrapper library for FFMpeg, called NReco, but it comes in two versions: a free version for the .NET Framework and a .NET Core version for $75.

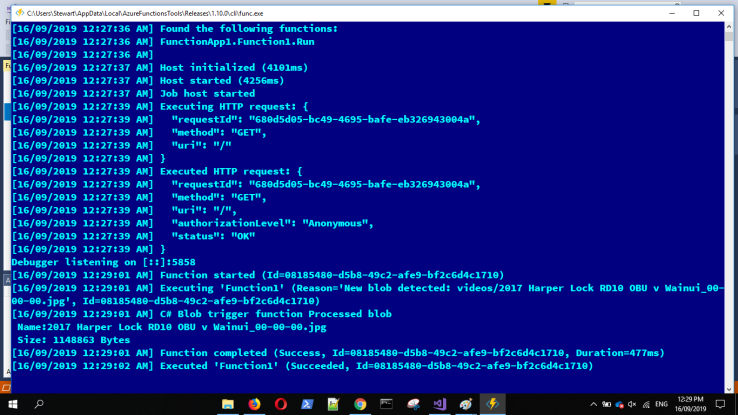

I will have a go at building a Version 1 Function App and see what happens.

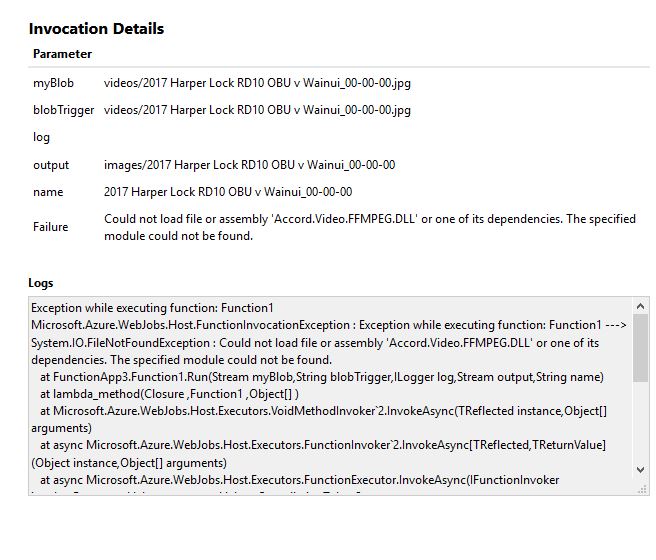

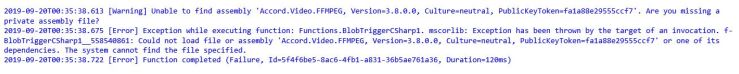

MS advisors got back to me regarding Friday's errors with a Version 1 function running Accord FFMpeg. They used my code snippets and built the app in their own environment. They get the same errors as me and are unable to run the function, so at least I know it's not just me and my computer. They have passed the problem higher up the chain.

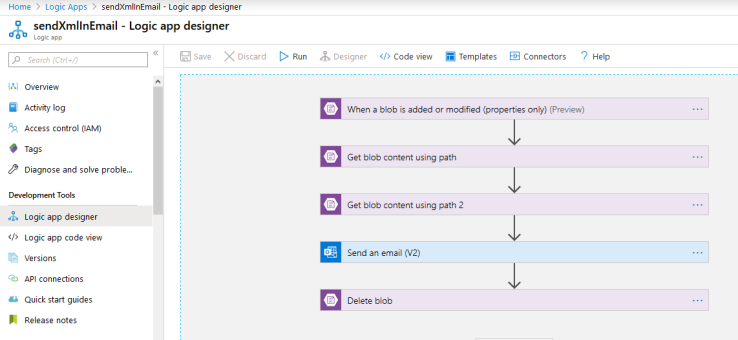

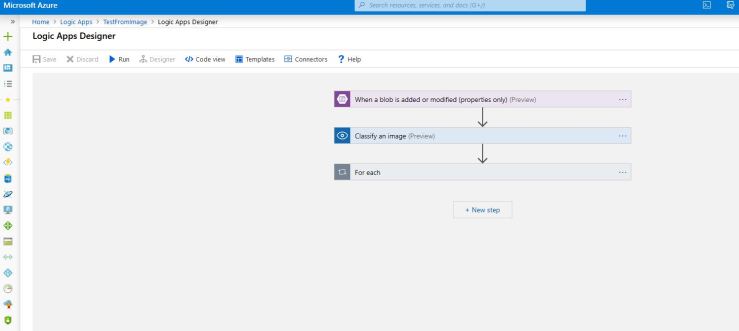

Some more advice from MS: I could consider using a Container Instance to run the code. A container instance can work with a Logic App and can be event driven. An event starts a container instance, which runs the conversion code, and the container stops when the code has finished. Billing is per second for the life of the container. One issue is that a container instance needs a Docker image to launch from.

After some research, I have decided this is a complicated solution to my problem. It is a potential solution for the final product, but a little too involved for my project.

Day 2

Further exploration into NReco and a few other FFMpeg wrappers that are available as NuGet packages with GitHub examples and support.

The biggest difficulty I have is getting the metadata out of a video file with these other wrappers.

I am unable to get the frame rate and duration data that I need to identify a specific frame in a video. Without these, my labeling code cannot attach a time to an event.
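To show why the frame rate and duration matter for labeling, here is a minimal sketch of the arithmetic that links a frame number to a time in the video. It is written in Python for brevity rather than in the project's .NET code, and the function names `frame_to_timestamp` and `frame_count` are made up for illustration.

```python
from datetime import timedelta


def frame_to_timestamp(frame_index: int, fps: float) -> timedelta:
    """Return the time offset of a frame, counting frames from zero."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return timedelta(seconds=frame_index / fps)


def frame_count(duration_seconds: float, fps: float) -> int:
    """Approximate number of whole frames in a video (truncated)."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return int(duration_seconds * fps)


# Frame 750 of a 25 fps video sits 30 seconds into the video.
print(frame_to_timestamp(750, 25.0))
```

Without a reliable frame rate from the wrapper, neither direction of this conversion is possible, which is why the metadata problem blocks the labeling step.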

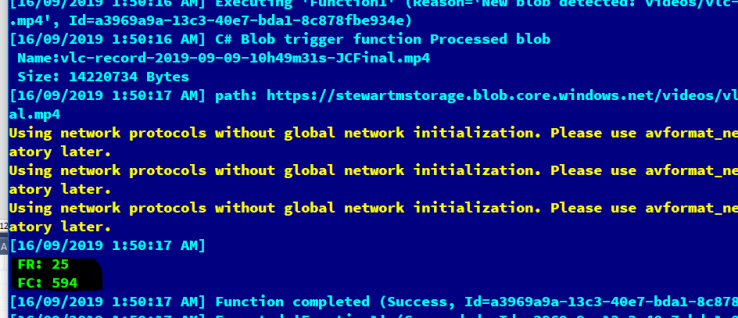

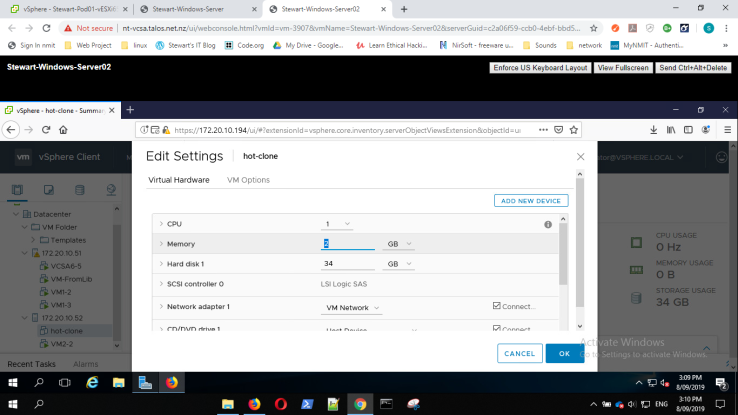

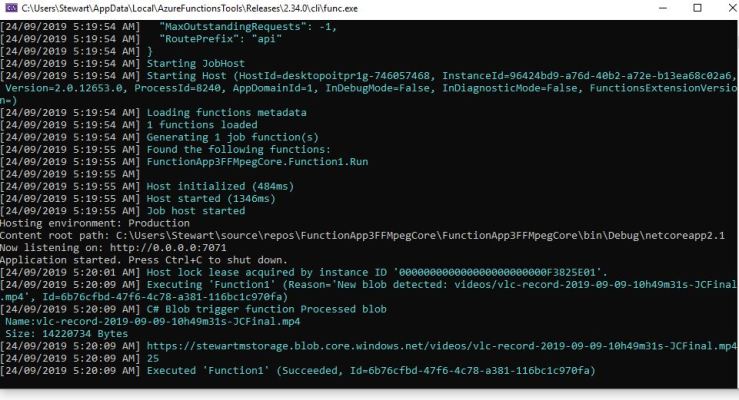

Using FFMpegCore, I was eventually able to get a frame rate, but there are issues with saving files to a container; saving to a URL is tricky.

I also experimented with FFMediaToolkit, with no success.

Day 3

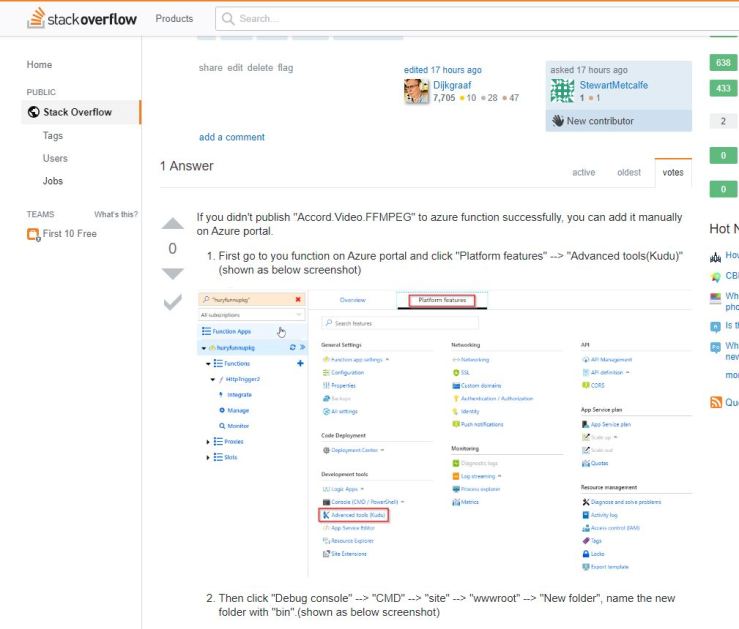

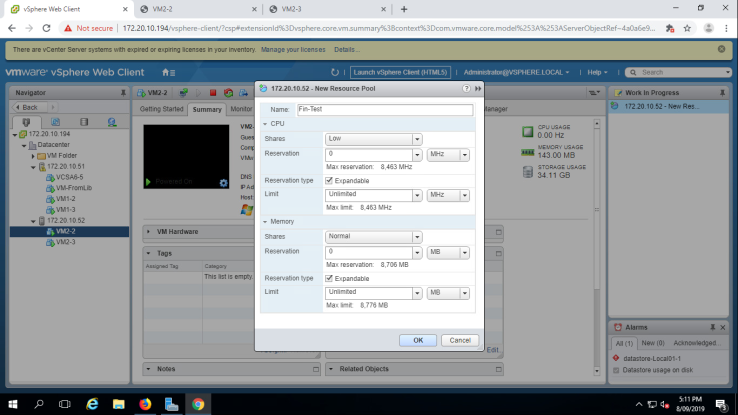

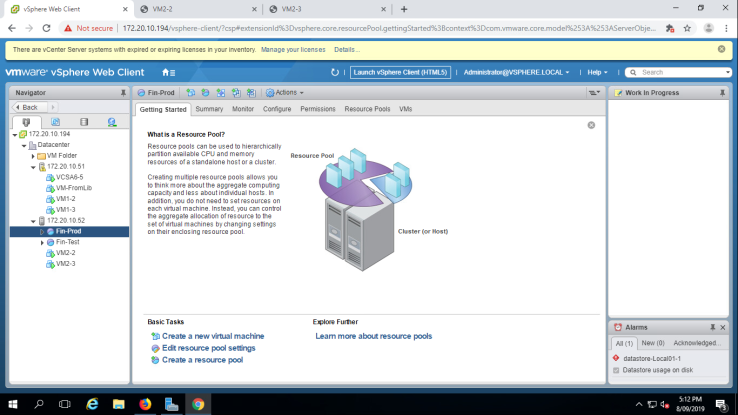

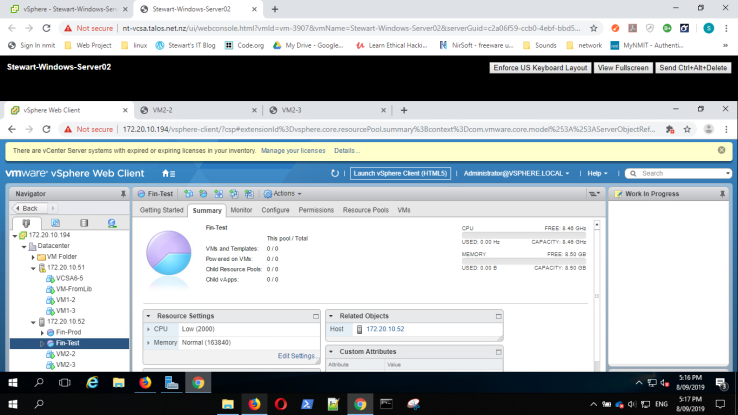

A little fiddling around with NReco and saving the images using Kudu services. Kudu is the environment in which the Function App resides. It has a console and user interface that allow exploration of a Function's directory structure, so I can add folders to this directory and save files there.

I was able to get NReco working in a Function App. It takes the URL of a video in a container as input and converts one frame every second into a JPG.

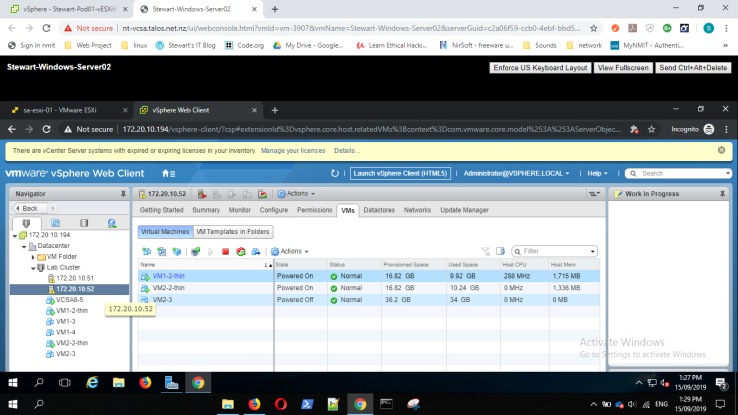

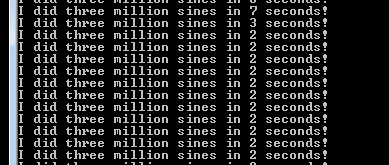

The function timed out after 5 minutes, the default running time of a Function App. Unfortunately, Azure did not perform as expected: the function ran incredibly slowly, taking about 2 seconds to extract a frame and save an image, so I did not get the scaling and speed I expected. This means that a Function App will never handle a 3 GB video as MS initially suggested it would. It also means that Accord FFMpeg would have the same problem in a Function.
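The numbers above can be turned into a rough back-of-the-envelope check. This is only a sketch: the 5 minute timeout, the 2 seconds per frame, and the one frame per second of video come from this post, while the one-hour video length is a made-up example, since the length of the 3 GB video is not stated here.

```python
def frames_before_timeout(timeout_s: float, seconds_per_frame: float) -> int:
    """How many frames can be extracted before the function times out."""
    return int(timeout_s // seconds_per_frame)


def processing_time_s(video_duration_s: float,
                      frames_per_video_second: float,
                      seconds_per_frame: float) -> float:
    """Total time needed to extract the sampled frames from a whole video."""
    return video_duration_s * frames_per_video_second * seconds_per_frame


# 300 s timeout at 2 s per frame allows 150 frames,
# which covers only 150 seconds (2.5 minutes) of video at one frame per second.
print(frames_before_timeout(300, 2.0))

# Hypothetical one-hour video: 3600 frames at 2 s each is 7200 s (two hours).
print(processing_time_s(3600, 1, 2.0))
```

Under these assumptions the function needs about twice the video's length in processing time, so any video longer than about two and a half minutes would hit the default timeout.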

Back to the drawing board. For the project, I will convert the video frames to images on a local machine and then upload the images to a container.

Day 4

For the project, I have abandoned video-to-image conversion on Azure.

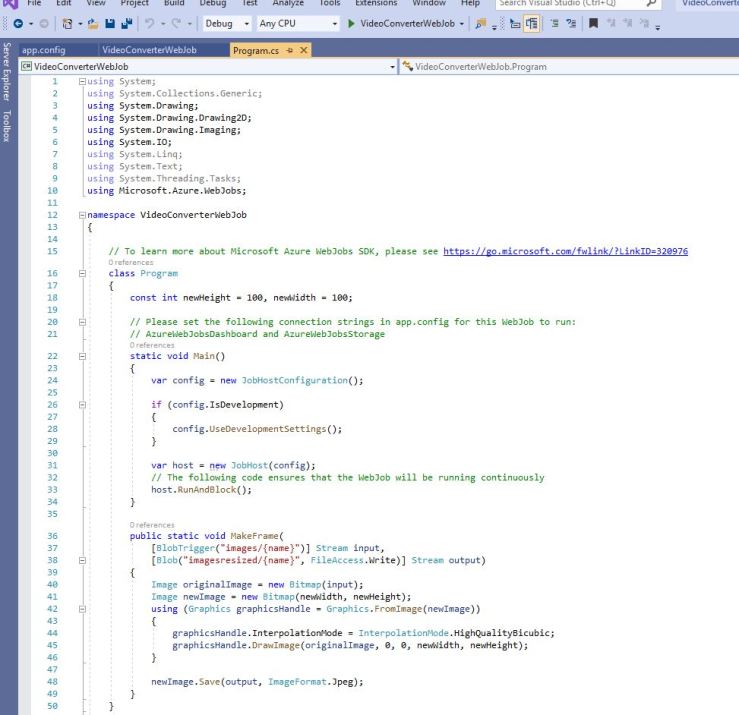

I have combined the Conversion and Upload code into 1 app.

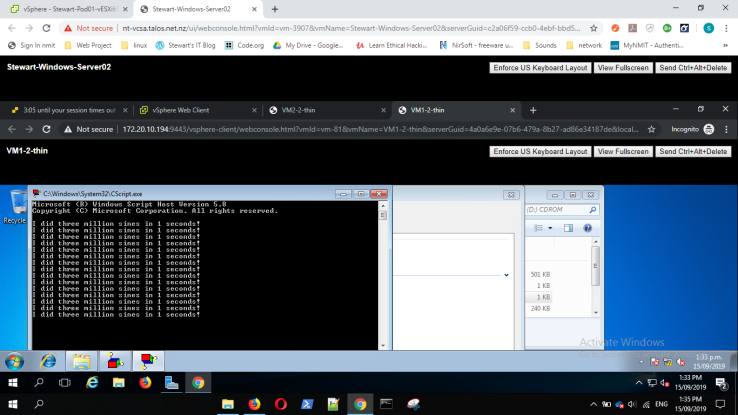

I have also changed the logic a little, so the app now only reads every 25th frame (or every 30th, depending on the frame rate). Previously I was reading every frame but only saving every 25th or 30th. This avoids loading the skipped frames into memory and will hopefully give a speed advantage.

I ran the app with a 3 GB video; it took 50 minutes to convert and then upload the images to Azure.

This would be an ideal place to investigate multi-threading and asynchronous tasks in future: converting and uploading at the same time, instead of the current approach of converting everything and then uploading everything.
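As an illustration of the change, the sketch below works out which frame numbers get read when the app steps through the video one second at a time. It is Python rather than the app's own code, and `frames_to_read` is a hypothetical helper name; it assumes the step is simply the frame rate rounded to a whole number.

```python
def frames_to_read(total_frames: int, fps: float) -> list[int]:
    """Indices of the frames to read: one per second of video."""
    step = max(1, round(fps))  # 25 for 25 fps, 30 for 29.97 or 30 fps
    return list(range(0, total_frames, step))


# A 4 second clip at 25 fps has 100 frames, but only 4 are read.
print(frames_to_read(100, 25.0))
```

The old logic would have touched all 100 frames in that example and thrown 96 of them away.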
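One possible shape for that future work is a producer and consumer sharing a queue, so uploading starts as soon as the first image exists. The sketch below is Python and only simulates the two steps: `convert_frames` and `upload_images` are stand-ins that pass file names around, not real conversion or Azure upload code.

```python
import queue
import threading


def convert_frames(frame_ids, out_queue):
    """Stand-in for conversion: emit one image name per frame."""
    for frame_id in frame_ids:
        out_queue.put(f"frame_{frame_id:06d}.jpg")
    out_queue.put(None)  # sentinel: tells the uploader there is no more work


def upload_images(in_queue, uploaded):
    """Stand-in for upload: record each image name as it arrives."""
    while True:
        name = in_queue.get()
        if name is None:
            break
        uploaded.append(name)  # a real uploader would send the file here


def run_pipeline(frame_ids):
    """Run conversion and upload at the same time on two threads."""
    images = queue.Queue(maxsize=10)  # bounded, so conversion cannot race far ahead
    uploaded = []
    producer = threading.Thread(target=convert_frames, args=(frame_ids, images))
    consumer = threading.Thread(target=upload_images, args=(images, uploaded))
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return uploaded


print(run_pipeline([0, 25, 50]))
```

The bounded queue is a design choice: it keeps only a handful of converted images waiting at any moment instead of the whole video's worth.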

I have also managed to get a progress bar working on the image uploader using threading and “BeginInvoke”, another learning experience.

I have no error checking yet, so I can currently upload a video to a picture container! This will obviously have to be changed so that the video goes to a video container.
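A simple first step towards that check could be to choose the container from the file extension. This is only a sketch in Python: the container names `videos` and `images` and the extension lists are assumptions for illustration, not the names used in the project.

```python
from pathlib import Path

# Hypothetical extension lists and container names, for illustration only.
VIDEO_EXTENSIONS = {".mp4", ".avi", ".mov"}
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def target_container(file_name: str) -> str:
    """Pick a container name from the file extension, or refuse the file."""
    suffix = Path(file_name).suffix.lower()
    if suffix in VIDEO_EXTENSIONS:
        return "videos"
    if suffix in IMAGE_EXTENSIONS:
        return "images"
    raise ValueError(f"Unsupported file type: {file_name}")


print(target_container("clip.MP4"))
print(target_container("frame_000025.jpg"))
```

An extension check does not prove what a file really contains, but it would stop the obvious mistake of a video landing in the picture container.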

Final Words for a while

Next week starts a mid-semester two-week study break.

I will take this opportunity to recharge my batteries a little. I will still be spending some days at the workplace to work through the challenges I have encountered, but will be taking a break from blogging.

So, anticipate the next blog in week 12, after the start of the week on 14 October.